Voice user interfaces are becoming more and more accessible. Technology giants such as Apple, Google, and Amazon have already embedded voice assistants in their products at a reasonable price. With advances in machine learning and neural processing hardware built into chips, voice recognition can become more precise and intuitive, and move closer to supporting conversation that feels human to human. Voice interaction therefore has great potential to become a new dominant mode of human-computer interaction. This project looks for the problems that are likely to arise in UI/UX design for voice interaction and proposes solutions.

"In voice user interfaces, you cannot create visual affordances. Consequently, looking at one, users will have no clear indications of what the interface can do or what their options are. At the same time, users are unsure of what they can expect from voice interaction, because we normally associate voice with communication with other people rather than with technology."

-Ditte Mortensen, How to Design Voice User Interface

"An Explanation created by users, usually highly simplified, of how something works"

-Don Norman, The Design of Everyday Things

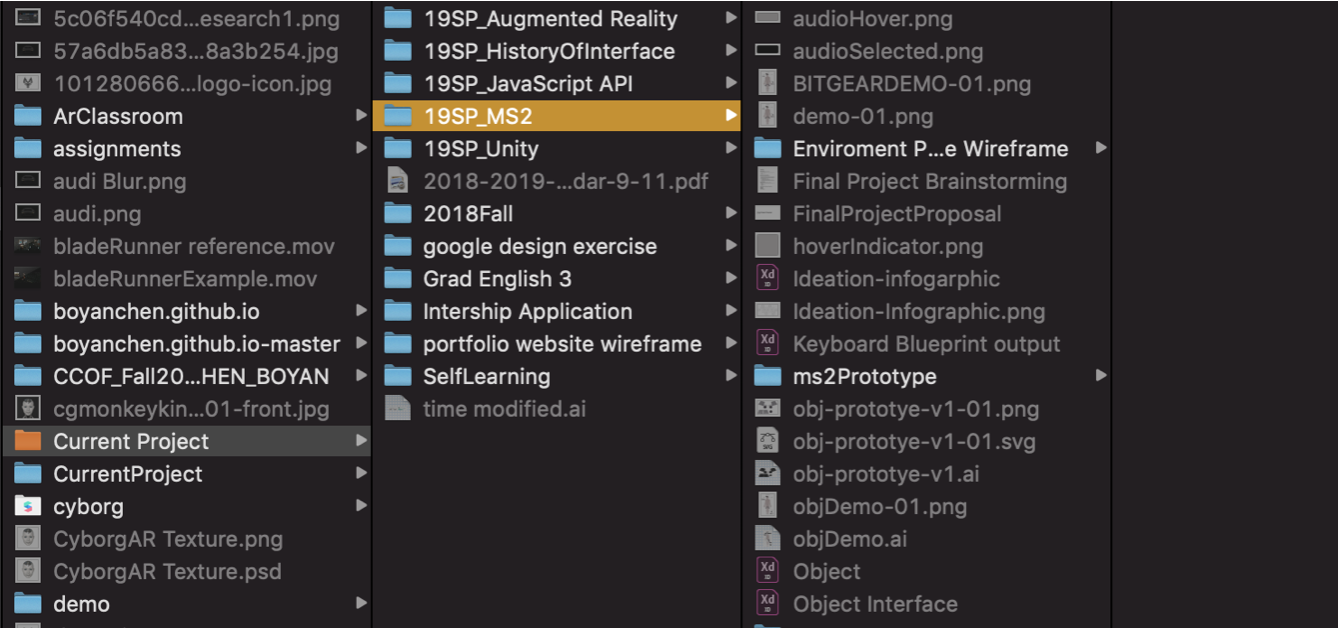

The traditional conceptual model is based on the mouse click, or the "Enter" key on the keyboard. Both clicking and pressing "Enter" build a conceptual model of a nested file structure, as shown in the picture. To find the major studio file, I first need to enter the current project folder. The conceptual model for the file system is therefore a nested branch; in the computer's terminal, this is described as a path. With voice interaction, however, we can go directly into a folder just by saying its name, so the conceptual model for voice interaction should be different. And if users can jump straight to the folder or file they want, how do we let them know where they are right now? This is one of the problems that needs to be solved when building a voice user experience.

Path:/Users/Ronnie/Desktop/Current\ Project/19SP_MS2
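The contrast between the two conceptual models can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the folder tree is a hypothetical stand-in built from the example path, and `open_by_path`, `build_name_index`, and `open_by_voice` are made-up helper names, not part of any prototype described here. The sketch also shows one possible answer to the "where am I?" question: the voice response reads back the full path of the folder the user landed in.

```python
# Hypothetical folder tree; folder names are taken from the example path.
TREE = {
    "Users": {
        "Ronnie": {
            "Desktop": {
                "Current Project": {
                    "19SP_MS2": {},
                },
            },
        },
    },
}


def open_by_path(tree, path):
    """Click/Enter model: descend one nested level per step."""
    node = tree
    for part in path.strip("/").split("/"):
        node = node[part]
    return node


def build_name_index(tree, prefix=""):
    """Map each folder name to every full path where it occurs."""
    index = {}
    for name, children in tree.items():
        full = f"{prefix}/{name}"
        index.setdefault(name, []).append(full)
        for key, paths in build_name_index(children, full).items():
            index.setdefault(key, []).extend(paths)
    return index


def open_by_voice(index, spoken_name):
    """Voice model: jump straight to a folder by name, then say where the user is."""
    matches = index.get(spoken_name, [])
    if len(matches) == 1:
        return f"Opened {spoken_name}. You are now in {matches[0]}"
    if not matches:
        return f"No folder named {spoken_name}"
    # Several folders share the name, so the interface has to ask back.
    return f"{len(matches)} folders are named {spoken_name}; which one?"
```

Jumping by name removes the intermediate steps, but it also removes the cues those steps gave about location, which is why the response in this sketch states the path explicitly.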

How will voice as a means of interaction change the design of VUIs in terms of typography and information architecture, and how will these changes affect the affordances users perceive and their conceptual model of the conversational user experience?

This is the very first stage of the project, and the main goal of this paper prototype is to test the conceptual model I came up with for voice interaction. I prioritize the conceptual model over affordance because I think the conceptual model is like a blueprint for the user experience: it defines what users expect from the interface. Once you are sure the model works for a large number of users, you can consider which objects help build that conceptual model in users' minds.

To narrow the scope of the research, I decided to focus on one specific context for voice interaction. The context should be a frequently used one, so that testers are familiar with it and can more easily talk about the differences. Of the options I considered, I finally chose the web browsing experience, since the web browser is one of the most frequently used applications in our daily lives.

Paper prototype

The conceptual model I came up with for the voice user experience is the computer as a servant that serves content directly to users. Previously, the conceptual model of the interface was a "Desktop": a bunch of folders containing the "content" we want to view. There is no "servant" to fetch the content we want to use or see, so users need to look for it by themselves. To build this conceptual model, we have buttons that let us open something, menus that tell us what we can do, and pages or tabs that let us switch between tasks. The purpose of all of these is to optimize our experience of searching. However, when users start to use voice to communicate with the computer, they no longer need to search by themselves. Their voice sends a command to the computer, and the computer executes it for them, so users should be able to focus more on the content.

Home page

The search results page

Instead of changing pages, it changes the elements on the table, just like a servant clearing away the empty beer bottles

The page just tells you what you have previously seen and which sites have been shown

This prototype is an improved version of prototype #1 and continues to test the "voice assistant as a servant" idea from prototype #1. Based on the feedback on prototype #1, I made an interactive demo that shows the transition animations as users browse through different content in the voice browser. I also added a specific context, "searching for upcoming events in NYC", as the goal of the whole prototype experience.

Intro and result page

In this prototype, I also test a more specific function: using a voice command to search from within the content directly. Users can ask where the NYC international auto show is located, and Google Maps is then triggered automatically to give them the information. I think this makes searching less disruptive, since users don't have to switch to another page to finish this kind of quick search task.

The search information pops up on the left
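The quick-search behavior in this prototype can be sketched as a small state update. This is a rough illustration, not the demo's actual implementation: `BrowserView`, `handle_utterance`, and the "where is ..." phrasing pattern are all assumptions made for the example, and a real system would rely on a speech recognition and language understanding service instead of a regular expression. The point it shows is that a location question fills a side panel and leaves the current page untouched, while any other request replaces the content.

```python
import re
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class BrowserView:
    """Hypothetical view state: one main page plus an optional side panel."""
    page: str
    side_panel: Optional[str] = None
    history: List[str] = field(default_factory=list)


# Assumed phrasing for a location question (illustrative only).
LOCATION_QUESTION = re.compile(
    r"^where is (?:the )?(?P<place>.+?)\??$", re.IGNORECASE
)


def handle_utterance(view: BrowserView, utterance: str) -> BrowserView:
    text = utterance.strip()
    match = LOCATION_QUESTION.match(text)
    if match:
        # Quick lookup: keep the current page and show the map beside it.
        view.side_panel = f"map: {match.group('place')}"
    else:
        # Anything else is treated as a request for new content.
        view.history.append(view.page)
        view.page = f"results: {text}"
        view.side_panel = None
    return view
```

For example, starting from a results page for upcoming events in NYC, asking "Where is the NYC International Auto Show?" only sets the side panel, so the user never leaves the content they were reading.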